Resiliency is a goal. I’m not sure if we ever actually reach it.

In my configuration, I’ve decided that the loss of a single node should be tolerated. This means that any hardware failure that takes a node offline is considered to be within the redundancy tolerance of the data center.

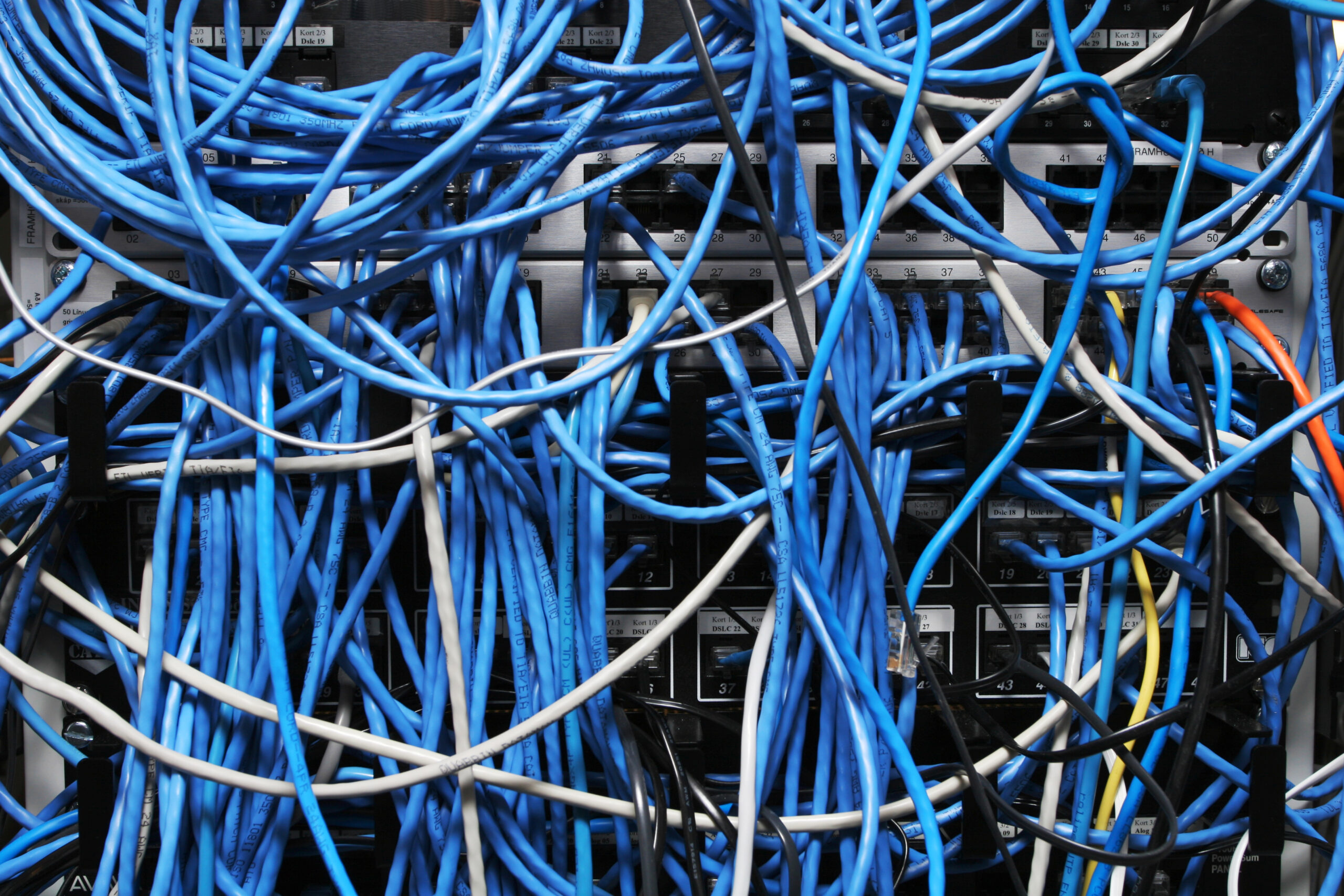

This means that while every node has at least two network interfaces, I am not going to require separate PSUs with dual NICs, each with two 10Gbit interfaces. Instead, each node has two 10Gbit interfaces and a 1 to 2.5 Gbit management port on RJ45 copper.

Each node is connected to two switches. Each switch has a separate fiber, run via a separate path, back to a primary router. Those primary routers are cross-connected with two fibers, via two different paths.

Each of the primary routers has a fiber link to each of the egress points. I.e., two paths in/out of the DC.

The NAS is a distributed system where we can lose any room and not lose access to any data. We can lose any fiber, and it will have NO effect on the NAS. We can lose any switch and not have it affect the NAS.

We can lose any one router and not impact the NAS.

So far, so good.

Each compute node (hypervisor and/or swarm member) is connected to the NAS for shared disk storage. Each compute node is part of the “work” OVN network. This means that the compute nodes are isolated from the physical network design.

Our load balancer runs as a virtual machine with two interfaces. One is on the physical network. The other is on the OVN work network.

This means that the VM can migrate to any of the hypervisors with no network disruption. Tested and verified. The hypervisors are monitored. If the load balancer becomes unavailable, they automatically reboot it on another hypervisor.

So what’s the issue?

That damn Load Balancer can’t find the workers if one specific node goes down. The LB is still there. It is still responding. It just stops giving answers.
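The distinction between "the LB is there" and "the LB gives answers" is one a probe can make explicit. Here is a rough sketch of such a probe, under several assumptions that are not stated above: that the load balancer fronts plain HTTP, that a 5xx status or a stalled request means it could not reach a worker, and that the function names `classify` and `probe` are just illustrative.

```python
import http.client
import socket
import urllib.error
import urllib.request


def classify(tcp_ok, http_status):
    """Turn raw probe observations into one of three states.

    Assumption: a 5xx status or no HTTP reply at all means the load
    balancer is alive but could not hand the request to a worker.
    """
    if not tcp_ok:
        return "lb-down"
    if http_status is None or http_status >= 500:
        return "lb-up-no-workers"
    return "ok"


def probe(host, port, path="/", timeout=3.0):
    """Check the load balancer in two steps: TCP connect, then HTTP GET."""
    # Step 1: is anything listening? This is the "still there" check.
    try:
        with socket.create_connection((host, port), timeout=timeout):
            pass
    except OSError:
        return classify(False, None)

    # Step 2: does a request come back with a real answer from a worker?
    url = f"http://{host}:{port}{path}"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            status = response.status
    except urllib.error.HTTPError as err:
        status = err.code          # the server replied, but with an error
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        status = None              # connected, but no usable HTTP reply
    return classify(True, status)
```

Running something like `probe("lb.example.internal", 80)` (a placeholder hostname) from a machine outside the cluster while taking the suspect node down would show whether the failure is "lb-down" or "lb-up-no-workers", which is the difference between a VM problem and a backend discovery problem.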

I am so frustrated.

So I’m going to throw some hardware at it.

We’ll pick up a pair of routers running pfSense. pfSense will be augmented with FRR and HAProxy to provide load balancing.

Maybe, just maybe, that will stabilize this issue.

This is a problem I will be able to resolve, once I can spend time running diagnostics without having clients down.