Learning new things

Another deranged asshole killed children at a school. 2 dead, 17 wounded. Nationwide headlines. The blood vultures leap to blame me for a shooting that took place more than 1,000 miles away.

Meanwhile, CBS News is running a headline on August 28, 2025: “6 dead, 27 hurt in Chicago weekend shootings, police say.”

I would rather not deal with it today.

OpenStack

Over the last month, I’ve been dealing with somebody who has not kept up with the technology he is using. It shows. I like to learn new things.

For the last two years I’ve been working with two major technologies: Ceph and Open Virtual Network (OVN). Ceph I feel I have a working handle on. Right now my Ceph cluster is down because of network issues, which I did to myself. OVN is another issue entirely.

A group of people smarter than I looked at networking and decided that instead of doing table lookups and then making decisions based on tables, they would create a language for manipulating the flow of packets, called “OpenFlow.”

This language could be implemented on hardware, creating very fast network devices. Since OpenFlow is a language, you can write routing functions as well as switching functions into the flows. You can also use it to create virtual devices.

The two types of virtual devices are “bridges” and “ports.” Ports are attached to bridges. OpenFlow processes a packet received on a port, called the ingress port, and moves it to the egress port. There is a lot going on in the process, but that is the gist.
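As a rough illustration of that gist, here is a toy model in Python. It is not how Open vSwitch is implemented; the `Bridge` and `Flow` classes, the port names, and the MAC addresses are all made up for the example. It only shows the idea of a bridge holding ports and a prioritized list of flows that decide the egress port.

```python
from dataclasses import dataclass, field


@dataclass
class Flow:
    """One rule: a priority, the fields to match, and the egress port."""
    priority: int
    match: dict
    out_port: str


@dataclass
class Bridge:
    """A toy bridge: a set of named ports plus a list of flows."""
    ports: set = field(default_factory=set)
    flows: list = field(default_factory=list)

    def add_port(self, name):
        self.ports.add(name)

    def add_flow(self, priority, match, out_port):
        self.flows.append(Flow(priority, match, out_port))

    def process(self, in_port, packet):
        """Return the egress port for a packet, or None if nothing matches."""
        fields = dict(packet, in_port=in_port)
        # Check the highest-priority flow first.
        for flow in sorted(self.flows, key=lambda f: f.priority, reverse=True):
            if all(fields.get(k) == v for k, v in flow.match.items()):
                return flow.out_port
        return None  # no flow matched: this toy model drops the packet


br = Bridge()
br.add_port("vm1")
br.add_port("vm2")
br.add_flow(100, {"in_port": "vm1", "dst_mac": "aa:bb:cc:00:00:02"}, "vm2")
br.add_flow(10, {"in_port": "vm2"}, "vm1")

print(br.process("vm1", {"dst_mac": "aa:bb:cc:00:00:02"}))  # vm2
print(br.process("vm1", {"dst_mac": "ff:ff:ff:ff:ff:ff"}))  # None
```

Real flows can match on many more fields and rewrite packets along the way, which is where the routing and switching functions come from.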

The process isn’t impossible to do manually, but it isn’t simple, and it isn’t easy to visualize.

OVN adds virtual devices to the mix, allowing for simpler definitions and more familiar operations.

With OVN you create switches, routers, and ports. A port is created on a switch or router, then attached to something else. That something else can be virtual machines, physical machines, or the other side of a switch-router pair.
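To make that concrete, here is a small Python sketch that builds the `ovn-nbctl` commands for one switch, one router, the switch-router pair between them, and a port for a VM. The names (`sw0`, `lr0`, `vm1`), the addresses, and the port naming scheme are assumptions for the example, not anything from my setup. The script only prints the commands; it does not run them.

```python
import shlex


def switch_router_pair(switch, router, router_mac, router_cidr):
    """Return ovn-nbctl argument lists joining one switch to one router."""
    lrp = f"{router}-{switch}"  # router-side port name (hypothetical scheme)
    lsp = f"{switch}-{router}"  # switch-side port name (hypothetical scheme)
    return [
        ["ovn-nbctl", "ls-add", switch],
        ["ovn-nbctl", "lr-add", router],
        ["ovn-nbctl", "lrp-add", router, lrp, router_mac, router_cidr],
        ["ovn-nbctl", "lsp-add", switch, lsp],
        # Mark the switch port as the peer of the router port.
        ["ovn-nbctl", "lsp-set-type", lsp, "router"],
        ["ovn-nbctl", "lsp-set-addresses", lsp, "router"],
        ["ovn-nbctl", "lsp-set-options", lsp, f"router-port={lrp}"],
    ]


def vm_port(switch, port, mac, ip):
    """Return ovn-nbctl argument lists for a port a VM will attach to."""
    return [
        ["ovn-nbctl", "lsp-add", switch, port],
        ["ovn-nbctl", "lsp-set-addresses", port, f"{mac} {ip}"],
    ]


commands = switch_router_pair("sw0", "lr0", "00:00:00:00:ff:01", "10.0.0.1/24")
commands += vm_port("sw0", "vm1", "00:00:00:00:00:01", "10.0.0.11")

for argv in commands:
    print(shlex.join(argv))
```

Printing first and running by hand is deliberate. Every one of these commands changes the NB database, so I would rather read them before they go anywhere.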

This is handled in the Northbound (NB) database. You modify the NB DB, which is then translated into lower-level logical flows, which are stored in the Southbound (SB) database. This is done by the “ovn-northd” process. This process keeps the two databases in sync with each other. Modifications to the NB DB are propagated into the SB DB and vice versa.

All of this does nothing for your actual networking. It is trivial to build all of this and have it “work.”

The thing that has to happen is that the SB database has to connect to the Open vSwitch (OVS) database. This is accomplished via ovn-controller.

When you introduce changes to the OVS database, they are propagated into the SB database. In the same way, changes to the SB database cause changes to the OVS database.

When the OVS database is modified, new OpenFlow programs are created, changing the processing of packets.

To centralize the process, you can add the address of a remote OVN database server to the OVS database. The OVN processes read this and self-configure. From the configuration, they can talk to the remote database to create the proper OVS changes.
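Here is a minimal sketch of that step, again in Python and again only printing the command. It builds a single `ovs-vsctl` call that sets the `external-ids` keys ovn-controller reads from the local OVS database. The addresses and the system ID are placeholders, and port 6642 is the usual default for the SB database, so check what your servers actually listen on.

```python
import shlex


def chassis_config(sb_remotes, encap_ip, system_id, encap_type="geneve"):
    """Build the ovs-vsctl call that points a node at remote SB servers.

    sb_remotes is a list such as ["tcp:192.0.2.10:6642"]; multiple
    entries are joined with commas for a clustered database.
    """
    return [
        "ovs-vsctl", "set", "open_vswitch", ".",
        f"external-ids:system-id={system_id}",
        f"external-ids:ovn-remote={','.join(sb_remotes)}",
        f"external-ids:ovn-encap-type={encap_type}",
        f"external-ids:ovn-encap-ip={encap_ip}",
    ]


# Placeholder addresses from the documentation range, not real servers.
argv = chassis_config(
    sb_remotes=["tcp:192.0.2.10:6642", "tcp:192.0.2.11:6642", "tcp:192.0.2.12:6642"],
    encap_ip="192.0.2.21",
    system_id="node1",
)
print(shlex.join(argv))
```

Listing all three database servers in `ovn-remote` lets a node try the others when one goes away.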

I had this working until one of the OVN control nodes took a dump. It took a dump for reasons, most of which revolved around my stupidity.

Because the cluster is designed to be self-healing and resilient, I had not noticed when two of the three OVN database servers stopped doing their thing. When I took that last node down, my whole configuration went down with it.

I could bring it back to life, but I’m not sure whether it is worth the time.

Now here’s the thing: everything I just explained comes from two or three very out-of-date web pages that haven’t been updated in many years. They were written for readers who already have some understanding of the OVS/OVN systems, and they make assumptions and simplifications.

The rest of the information comes from digging things out of OpenStack’s networking component, Neutron.

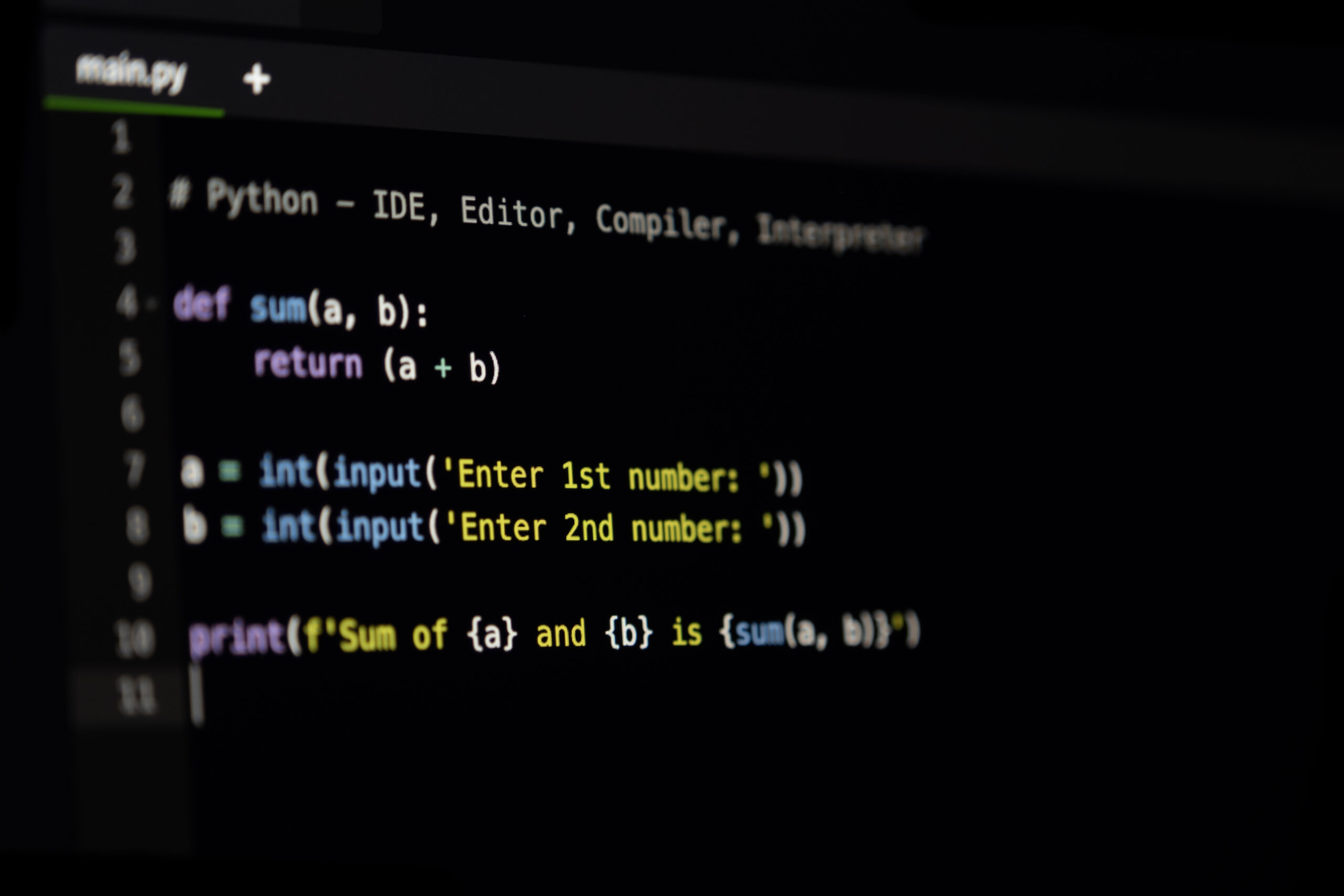

I have a choice: I can continue down the path I am currently using, or I can learn OpenStack.

I choose to learn OpenStack.

First, it is powerful. With great power comes an even greater chance to mess things up. There are configuration files that are hundreds of lines long.

There are four components that I think I understand. The first is the identity manager, Keystone. This is where you create and store user credentials and roles. The next is the image service, Glance. This is where your disk images are stored; volumes are handled by a separate block storage service, Cinder. Then there is the compute component, Nova, which handles building and configuring virtual machines. Finally, there is the networking component, Neutron.

For the simple things, I actually feel like I have it mostly working.

But the big thing is to get OVN working across my Ceph nodes. That hasn’t happened.

So for today, I’ll dig and dig some more, until I’m good at this.

Then I’ll add another technology to my skill set.