Networking should be simple. Even when it was big, it was simple. Plug the wires in correctly, assign the IP address your system administrator gave you, and you are up and running on the internet.

We built each node on the net to be able to withstand attacks. Each node was a fortress.

But when we put Win95 machines on the net, that changed.

The mean time to compromise for a Win95 machine was less than 72 hours.

Today, an unhardened Windows box has about an hour before it is compromised. Many IoT devices last only about five minutes.

To “fix” this issue, we introduced firewalls. A firewall examines every packet that enters, deciding if the packet should be allowed forward.

Since everything was in plain text, it was easy to examine a packet and make decisions. This “fixed” the Windows Vulnerability issue.
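The per-packet decision described above can be sketched as a first-match rule list. This is a minimal illustration, not how any particular firewall is implemented: the `Packet` and `Rule` types, the `decide` function, and the example addresses (taken from the documentation ranges) are all assumptions made for the sketch.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional


@dataclass(frozen=True)
class Packet:
    src: str        # source IP address
    dst: str        # destination IP address
    dst_port: int   # destination TCP/UDP port


@dataclass(frozen=True)
class Rule:
    action: str               # "allow" or "deny"
    src_net: str              # source network in CIDR form
    dst_net: str              # destination network in CIDR form
    dst_port: Optional[int]   # None matches any port

    def matches(self, pkt: Packet) -> bool:
        return (
            ip_address(pkt.src) in ip_network(self.src_net)
            and ip_address(pkt.dst) in ip_network(self.dst_net)
            and (self.dst_port is None or self.dst_port == pkt.dst_port)
        )


def decide(rules: list[Rule], pkt: Packet) -> str:
    """Return the action of the first matching rule; deny if none match."""
    for rule in rules:
        if rule.matches(pkt):
            return rule.action
    return "deny"


# Hypothetical rule set: only web traffic to one server is let through.
RULES = [
    Rule("allow", "0.0.0.0/0", "192.0.2.10/32", 80),
    Rule("allow", "0.0.0.0/0", "192.0.2.10/32", 443),
]

print(decide(RULES, Packet("198.51.100.7", "192.0.2.10", 443)))   # allow
print(decide(RULES, Packet("198.51.100.7", "192.0.2.10", 3389)))  # deny
```

The default-deny at the end is the design choice that matters: anything not explicitly allowed never reaches the machine behind the firewall.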

The next complication came about because Jon Postel didn’t dream big enough. His belief was that there would never be more than a few thousand machines on the Internet.

This was an important argument as it shaped the new Internet Protocol. He wanted 2 bytes (16 bits) for host addressing. Mike wanted more. He argued that there would be hundreds of thousands of machines on the Internet.

They compromised on a 4-byte (32-bit) address, or around 4 billion addresses. But since the address space was going to be sparse, the actual number would be less than that. Much less than that.
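The arithmetic behind those numbers is quick to check. The sketch below uses Python's standard `ipaddress` module; the 10.0.0.0/8 block is my own example of address space that never appears on the public Internet, picked only to show one way the usable total shrinks.

```python
import ipaddress

two_byte = 2 ** 16    # addresses available with a 16-bit host address
four_byte = 2 ** 32   # addresses available with a 32-bit address

# The whole IPv4 space expressed as one network.
everything = ipaddress.ip_network("0.0.0.0/0")
assert everything.num_addresses == four_byte

# Illustrative example: a private block that is not routed publicly.
private_block = ipaddress.ip_network("10.0.0.0/8")

print(two_byte)                      # 65536
print(four_byte)                     # 4294967296
print(private_block.num_addresses)   # 16777216
```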

This meant that there was a limit on the number of networks available at a time when we needed more and more networks.

Add to that, we had homes that suddenly had more than one device on the Internet. There were sometimes two or even three devices in a single home.

Today, a typical home will have a dozen or more devices with an internet address.

This led to the sharing of IP addresses. This required Network Address Translation.
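A toy version of that translation looks something like the sketch below. The `Nat` class, its method names, the starting port, and the addresses are all hypothetical. Real NAT implementations also track the protocol and the remote endpoint and expire idle entries, none of which is modeled here.

```python
from itertools import count


class Nat:
    """Toy port-based NAT: many private hosts share one public address."""

    def __init__(self, public_ip: str, first_port: int = 40000):
        self.public_ip = public_ip
        self._ports = count(first_port)   # next free public port
        self._out = {}   # (private_ip, private_port) -> public_port
        self._in = {}    # public_port -> (private_ip, private_port)

    def outbound(self, private_ip: str, private_port: int) -> tuple[str, int]:
        """Rewrite the source of a packet leaving the home network."""
        key = (private_ip, private_port)
        if key not in self._out:
            port = next(self._ports)
            self._out[key] = port
            self._in[port] = key
        return self.public_ip, self._out[key]

    def inbound(self, public_port: int):
        """Map a reply back to the private host, or None if unsolicited."""
        return self._in.get(public_port)


nat = Nat("203.0.113.5")
print(nat.outbound("192.168.1.10", 51000))  # ('203.0.113.5', 40000)
print(nat.outbound("192.168.1.11", 51000))  # ('203.0.113.5', 40001)
print(nat.inbound(40001))                   # ('192.168.1.11', 51000)
print(nat.inbound(40999))                   # None
```

Two devices using the same private port end up on different public ports, which is how one address can stand in for a whole house.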

stateDiagram-v2

direction LR

classDef outside fill:#f00

classDef both fill:orange

classDef inside fill:green

Internet:::outside --> DataCenter

DataCenter:::outside --> Firewall

Firewall:::both --> Server

class Server inside

Here we see an outside world that is dangerous, shown in red. The Firewall, in orange, sits on both sides and creates safety for our Server, in green.

stateDiagram-v2

direction LR

classDef outside fill:#f00

classDef both fill:orange

classDef inside fill:green

Internet:::outside --> DataCenter

DataCenter:::outside --> Firewall

Firewall:::both --> LoadBalancer

state LoadBalancer {

Server1

Server2

}

class LoadBalancer inside

Server1 and Server2 are part of the compute cluster. The load balancer sends traffic to the servers in some balanced way.

stateDiagram-v2

direction LR

classDef outside fill:#f00

classDef both fill:orange

classDef inside fill:green

Internet:::outside --> DataCenter

DataCenter:::outside --> Firewall

Firewall:::both --> LoadBalancer

state LoadBalancer {

Ingress1 --> Server1

Ingress2 --> Server2

Server1 --> Compute1

Server1 --> Compute2

Server1 --> Compute3

Server2 --> Compute1

Server2 --> Compute2

Server2 --> Compute3

}

class LoadBalancer inside

The firewall sends traffic to the load balancer. The load balancer sends traffic in a balanced fashion to Ingress 1 or Ingress 2. This configuration means that either Ingress 1 or Ingress 2 can go offline and the cluster continues to work.

The actual structure is that the Ingress process runs on the different servers. It is normal to have 3 ingress processes running on 3 servers, with more servers hosting other processes.
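The balancing and failover behavior described above can be sketched in a few lines. Round robin is an assumption here, since "balanced" could mean several strategies, and the `RoundRobinBalancer` class and backend names are made up for the illustration.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hand out backends in turn, skipping any marked offline."""

    def __init__(self, backends: list[str]):
        self.backends = list(backends)
        self.healthy = set(backends)
        self._ring = cycle(self.backends)

    def mark_down(self, backend: str) -> None:
        self.healthy.discard(backend)

    def mark_up(self, backend: str) -> None:
        if backend in self.backends:
            self.healthy.add(backend)

    def pick(self) -> str:
        # One full lap of the ring is enough to find a healthy backend.
        for _ in range(len(self.backends)):
            candidate = next(self._ring)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends")


lb = RoundRobinBalancer(["ingress1", "ingress2"])
print([lb.pick() for _ in range(4)])  # ['ingress1', 'ingress2', 'ingress1', 'ingress2']
lb.mark_down("ingress1")
print([lb.pick() for _ in range(3)])  # ['ingress2', 'ingress2', 'ingress2']
```

With one ingress marked down, every request lands on the survivor, and only when both are down does the balancer have nowhere to send traffic.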

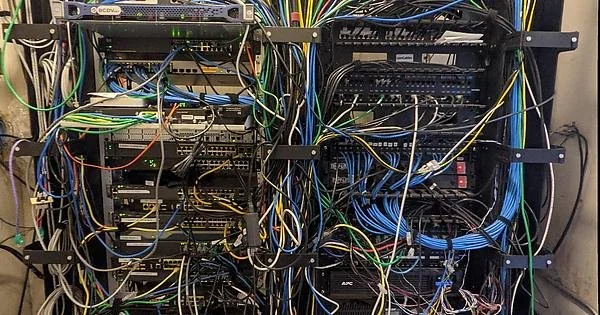

So what’s so complicated? What’s complicated is that each of the devices in that path must be configured correctly, which gets more complex than it should be.

The path packets travel is configured by routing configurations. This is done by BGP outside the Data Center and OSPF inside the Data Center. The Firewall must be configured to only pass the traffic it is supposed to.

Firewall rules grow and can be complex. My firewall rules exist under the principle of “If it ain’t broken, don’t fix it.” Modifying firewall rules is always a concern. It is not unheard of to lock yourself out of your firewall, or to bring down a thousand sites with one bad configuration rule in a firewall.

The load balancer must also be configured correctly. In our case, the load balancers handle the SSL/TLS offload, which lets them read the request and make routing decisions. They then use internal SSL/TLS for all traffic within the cluster.

The Ingress processes live on a virtual network for intra-cluster communications and on the load balancer network for communications with the load balancers.

Each of the compute instances communicates on the intra-cluster network only.

All of this is wonderful, until you start attempting to figure out how to get the correct packets to the correct servers.

The firewall is based on pfSense. The load balancer is based on HAProxy. The ingress services are provided by Nginx. The intra-cluster networking and containerization are provided by Docker/K8s.

The issue of the day: if I upload large files via the load balancer, the upload fails. Uploading to the ingress services directly works, which implies that HAProxy is the issue.

Frustration keeps growing. When will it get easy?

Amazing how complexity can blossom from combinations of nominally simple things, isn’t it?